Many developers do not use cached_property and lru_cache from the functools module of the standard library, and they also do not cache HTTP requests/responses in an external file or database. The examples in this article were tested under Python 3.8.

Using functools.cached_property

Let's say you have an intensive calculation. It takes time and CPU, and it happens all the time: for example, a webshop needs to calculate some values each time a client accesses the site.

Example usage of cached_property:

    from functools import cached_property
    import statistics
    from time import time


    class DataSet:
        def __init__(self, sequence_of_numbers):
            self._data = sequence_of_numbers

        @cached_property
        def stdev(self):
            return statistics.stdev(self._data)

        @cached_property
        def variance(self):
            return statistics.variance(self._data)


    numbers = range(1, 10000)
    testDataSet = DataSet(numbers)

    # First access: both values are computed and stored on the instance.
    start = time()
    result = testDataSet.stdev
    result = testDataSet.variance
    end = time()
    print(f"First run: {(end - start):.6f} second")

    # Second access: both values come straight from the cache.
    start = time()
    result = testDataSet.stdev
    result = testDataSet.variance
    end = time()
    print(f"Second run: {(end - start):.6f} second")

    # Same calculation without any caching, for comparison.
    start = time()
    result = statistics.stdev(numbers)
    result = statistics.variance(numbers)
    end = time()
    print(f"RAW run: {(end - start):.6f} second")

The output looks similar to this:

    First run: 0.247226 second
    Second run: 0.000002 second
    RAW run: 0.242232 second

You can run code online: Python code example IDE Online
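One thing the timing example does not show is what happens when the underlying data changes. The following is a minimal sketch, not part of the original example: the `append` method is a hypothetical helper added only for illustration. It relies on the documented behaviour that cached_property stores its result in the instance `__dict__` under the property name, so removing that entry forces a recalculation on the next access.

```python
from functools import cached_property
import statistics


class DataSet:
    def __init__(self, sequence_of_numbers):
        self._data = list(sequence_of_numbers)

    @cached_property
    def stdev(self):
        return statistics.stdev(self._data)

    def append(self, value):
        # Hypothetical helper: change the data and drop the stale cached value.
        self._data.append(value)
        # The cached result lives in the instance __dict__ under the property
        # name; removing it makes the next access recompute the value.
        self.__dict__.pop("stdev", None)


data = DataSet([1, 2, 3, 4])
first = data.stdev      # computed and cached
data.append(100)        # cached value removed
second = data.stdev     # recomputed from the new data
print(first, second)
```

Without the `pop` call, `second` would still be the value computed from the original four numbers.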

Using functools.lru_cache

lru_cache is a decorator that wraps a function with a memoizing callable that saves up to the maxsize most recent calls.
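To make the maxsize limit concrete, here is a small sketch with a deliberately tiny cache. The function `square` and the `calls` list are made up for illustration; they only record which calls actually reach the function body.

```python
from functools import lru_cache

calls = []  # records every call that was NOT answered from the cache


@lru_cache(maxsize=2)
def square(n):
    calls.append(n)
    return n * n


square(1)   # miss
square(2)   # miss
square(1)   # hit, 1 becomes the most recently used entry
square(3)   # miss, the cache is full so the least recently used entry (2) is evicted
square(2)   # miss again, because 2 was evicted
info = square.cache_info()
print(calls)
print(info)
```

With maxsize=None, as in the Fibonacci example in this section, nothing is ever evicted and the cache can grow without bound.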

Again, suppose you have a lot of calculations and want to keep the results of earlier ones so that they help build the next result. In the example below, once N has been calculated, N+1 needs just one more step instead of a complete recalculation.

    from functools import lru_cache
    from time import time


    @lru_cache(maxsize=None)
    def fib(n):
        if n < 2:
            return n
        return fib(n-1) + fib(n-2)


    # First run: every value is computed once and cached.
    start = time()
    result = [fib(n) for n in range(40000)]
    end = time()
    print(f"First run: {(end - start):.6f} second")

    # Second run: the same values, all served from the cache.
    start = time()
    result = [fib(n) for n in range(40000)]
    end = time()
    print(f"Second run: {(end - start):.6f} second")

    # Third run: one value fewer (N-1).
    start = time()
    result = [fib(n) for n in range(39999)]
    end = time()
    print(f"Third run: {(end - start):.6f} second")

    # Fourth run: one value more (N+1), only fib(40000) is new.
    start = time()
    result = [fib(n) for n in range(40001)]
    end = time()
    print(f"Fourth run: {(end - start):.6f} second")

    print(fib.cache_info())

The output would be:

    First run: 0.278697 second
    Second run: 0.017155 second
    Third run: 0.017530 second
    Fourth run: 0.065415 second
    CacheInfo(hits=199997, misses=40001, maxsize=None, currsize=40001)

The first run fills the cache. The second run reuses it completely, the third run asks for one value fewer (N-1), and the fourth asks for one value more (N+1), so only a single new value has to be computed.

As the last three runs show, we reuse the cache. The same approach works for database queries, calculations, or any other expensive operation whose result we want to keep for repeated calls.
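When the cached function reads from something that can change, such as a database, the cache may serve stale results. The sketch below illustrates this with a plain dictionary standing in for a database table; `PRICES` and `get_price` are hypothetical names used only for this illustration. The `cache_clear()` method is part of the lru_cache wrapper.

```python
from functools import lru_cache

# Hypothetical stand-in for a database table.
PRICES = {"apple": 1.0, "pear": 2.0}


@lru_cache(maxsize=128)
def get_price(name):
    # Imagine a slow database query here.
    return PRICES[name]


before = get_price("apple")   # real lookup, result cached
PRICES["apple"] = 1.5         # the underlying data changes
stale = get_price("apple")    # still answered from the cache
get_price.cache_clear()       # drop every cached entry
fresh = get_price("apple")    # real lookup again
print(before, stale, fresh)
```

Clearing the whole cache is the bluntest option, but it is the only invalidation hook lru_cache offers.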

Here is an online IDE you can run and view: lru_cache example

HTTP request caching

With lru_cache we can also cache web requests for static pages. Another option is to keep the result in a file named after our input data.

Let us look at the first option:

    from functools import lru_cache
    import urllib.error
    import urllib.request
    from time import time


    @lru_cache(maxsize=32)
    def get_pep(num):
        'Retrieve text of a Python Enhancement Proposal'
        resource = 'http://www.python.org/dev/peps/pep-%04d/' % num
        try:
            with urllib.request.urlopen(resource) as s:
                return s.read()
        except urllib.error.HTTPError:
            # The 'Not Found' result is cached as well.
            return 'Not Found'


    # First run: every distinct PEP number triggers one HTTP request.
    start = time()
    for n in 8, 290, 308, 320, 8, 218, 320, 279, 289, 320, 9991:
        pep = get_pep(n)
        #print(n, len(pep))
    end = time()
    print(f"First run: {(end - start):.6f} second")
    print(get_pep.cache_info())
    print("\n")

    # Second run: everything is answered from the cache.
    start = time()
    for n in 8, 290, 308, 320, 8, 218, 320, 279, 289, 320, 9991:
        pep = get_pep(n)
        #print(n, len(pep))
    end = time()
    print(f"Second run: {(end - start):.6f} second")
    print(get_pep.cache_info())

If we run this code, we get:

    First run: 0.897728 second
    CacheInfo(hits=3, misses=8, maxsize=32, currsize=8)

    Second run: 0.000026 second
    CacheInfo(hits=14, misses=8, maxsize=32, currsize=8)

You can run this code: HTTP Caching

Now let us talk about real projects. You have an IP address or a word, and you need to check it or get a replacement for it from some service. But there are 2^32-1 IP addresses or 50 million words, and you don't want to lose the information you have already obtained from these services. Caching inside the Python process is not enough for this, because the cache disappears when the process exits. So what do we do? We put the results in a file or a database.

Example code:

    import urllib.error
    import urllib.request
    from time import time


    def get_pep(num):
        'Retrieve text of a Python Enhancement Proposal'
        resource = 'http://www.python.org/dev/peps/pep-%04d/' % num
        # Serve the page from the cache file if it already exists.
        try:
            with open(str(num), "r", encoding="utf-8") as f:
                return f.read()
        except IOError:
            pass  # not cached yet, fetch it below
        try:
            with urllib.request.urlopen(resource) as s:
                # Decode so that the file holds text, not the repr of bytes.
                txt = s.read().decode("utf-8", errors="replace")
            with open(str(num), "w", encoding="utf-8") as ff:
                ff.write(txt)
            return txt
        except urllib.error.HTTPError:
            # Failures are not written to disk, so they are requested again.
            return 'Not Found'


    # First run: pages are downloaded and written to files.
    start = time()
    for n in 8, 290, 308, 320, 8, 218, 320, 279, 289, 320, 9991:
        pep = get_pep(n)
    end = time()
    print(f"First run: {(end - start):.6f} second")
    print("\n")

    # Second run: pages are read back from the files.
    start = time()
    for n in 8, 290, 308, 320, 8, 218, 320, 279, 289, 320, 9991:
        pep = get_pep(n)
    end = time()
    print(f"Second run: {(end - start):.6f} second")

You can run code caching results from http

This code produces output similar to:

    First run: 4.196623 second

    Second run: 0.358382 second

Why is this better? In short: if you have 20 million keys, words, or similar items and you run the job day after day, it is better to keep the results in a database or in files. This example (writing to a file) is the simplest proof of concept. I was too lazy to implement storage in MySQL, PostgreSQL, or SQLite.
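For readers who want to try the database variant, here is a rough sketch using the sqlite3 module from the standard library. It is one possible approach under stated assumptions, not a tested production design: the table name `cache`, the helper `make_cached_fetch`, and the stand-in `fake_fetch` are all made up for this illustration, and `fake_fetch` replaces the real HTTP request so the sketch runs without network access.

```python
import sqlite3


def make_cached_fetch(fetch, db_path=":memory:"):
    """Wrap fetch(key) -> str so that results are stored in an SQLite table."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS cache (key TEXT PRIMARY KEY, value TEXT)"
    )

    def cached_fetch(key):
        # Look in the database first.
        row = conn.execute(
            "SELECT value FROM cache WHERE key = ?", (str(key),)
        ).fetchone()
        if row is not None:
            return row[0]
        # Not stored yet: do the expensive call and remember the result.
        value = fetch(key)
        with conn:  # commits the transaction on success
            conn.execute(
                "INSERT OR REPLACE INTO cache (key, value) VALUES (?, ?)",
                (str(key), value),
            )
        return value

    return cached_fetch


fetched = []  # records which keys needed a real fetch


def fake_fetch(num):
    # Hypothetical stand-in for the HTTP request in get_pep.
    fetched.append(num)
    return f"text of PEP {num}"


get_pep_cached = make_cached_fetch(fake_fetch)
results = [get_pep_cached(n) for n in (8, 290, 8, 290, 8)]
print(fetched)
```

Passing a file name instead of ":memory:" as db_path keeps the cache between runs, which is the point of moving it out of the Python process.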

This is just some cheat sheet about the FFmpeg command line. To know about the history there is link.

This is just some cheat sheet about the FFmpeg command line. To know about the history there is link.

Example without and with twitter card meta tags

Example without and with twitter card meta tags Picture was taken from http://www.tankado.com

Picture was taken from http://www.tankado.com DevOpsDays Berlin 2013

DevOpsDays Berlin 2013

Godina 3032

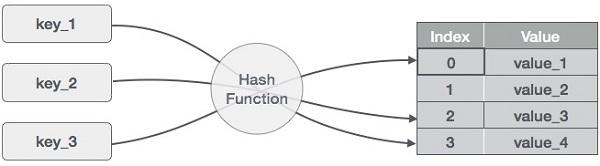

Godina 3032 Picture of a hash function is taken from www.tutorialspoint.com

Picture of a hash function is taken from www.tutorialspoint.com