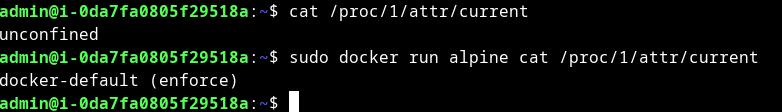

An unusual task with no points: are you in a VM or a Docker shell? A simple solution could be this:

Vladimir Cicovic - Security, Programming, Puzzles, Cryptography, Math, Linux

Solution for the SadServers Salta task: https://sadservers.com/scenario/salta

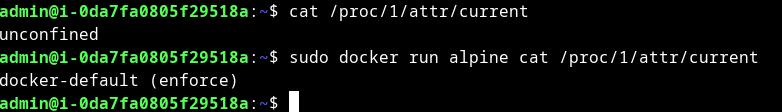

After logging into the server, I noticed that port 8888 was already in use. The lsof tool was missing, so I installed it with "sudo apt install lsof" and checked which process was using port 8888. It was nginx, so I stopped it with "sudo systemctl stop nginx".
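The port check can be sketched as a small shell helper. The function name `port_listener` is made up for illustration, and the lsof options used here only narrow the output to listening TCP sockets; they are an assumption, not taken from the screenshots.

```shell
# Hypothetical helper: list the process listening on a given TCP port.
# Requires lsof ("sudo apt install lsof" if it is missing).
port_listener() {
  sudo lsof -nP -iTCP:"$1" -sTCP:LISTEN
}

# Usage on the server (commented out so the snippet has no side effects):
#   port_listener 8888
#   sudo systemctl stop nginx   # only if nginx turns out to hold the port
```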

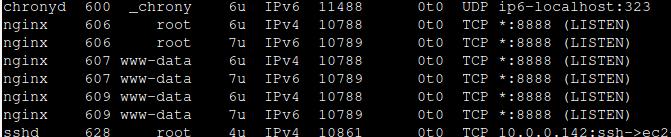

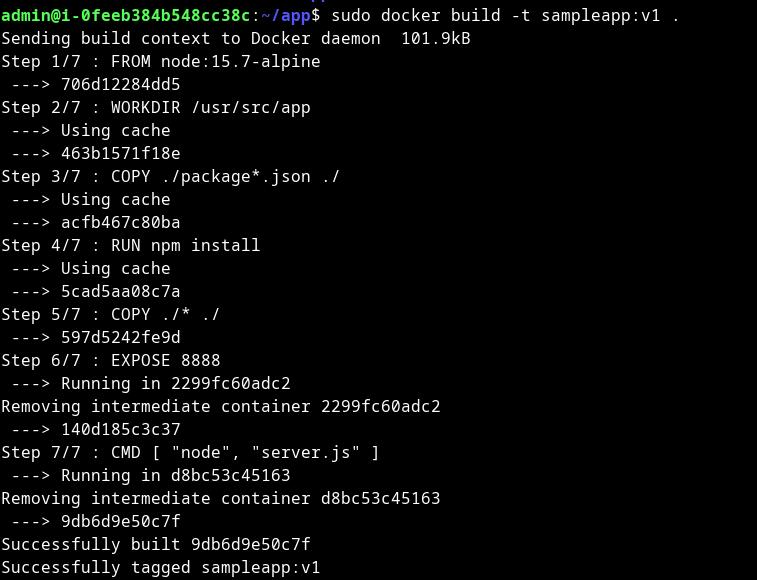

Inside the Dockerfile, I found two mistakes: the port was written as 8880 instead of 8888, and CMD referenced "serve.js" instead of "server.js", a local file in the same directory.
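A minimal sketch of the same two fixes done with sed, run against a throwaway file. The Dockerfile contents below (base image, EXPOSE line, CMD form) are assumptions for illustration and not a copy of the file on the server; only the 8880 and "serve.js" mistakes come from the task.

```shell
# Hypothetical Dockerfile resembling the broken one (contents assumed).
workdir=$(mktemp -d)
cat > "$workdir/Dockerfile" <<'EOF'
FROM node:alpine
WORKDIR /usr/src/app
COPY . .
EXPOSE 8880
CMD ["node", "serve.js"]
EOF

# Fix the port and the script name in place (GNU sed syntax).
sed -i -e 's/^EXPOSE 8880$/EXPOSE 8888/' \
       -e 's/serve\.js/server.js/' "$workdir/Dockerfile"

cat "$workdir/Dockerfile"
```

Editing the file by hand in an editor works just as well; sed is only convenient when the change has to be repeatable.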

Once the Dockerfile was fixed, I built the Docker image with: "sudo docker build -t sampleapp:v1 ."

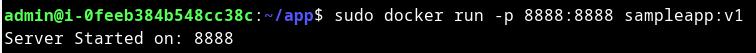

I ran the app with "sudo docker run -p 8888:8888 sampleapp:v1" and the task was done.

Solution for the Cape Town task: https://sadservers.com/scenario/capetown

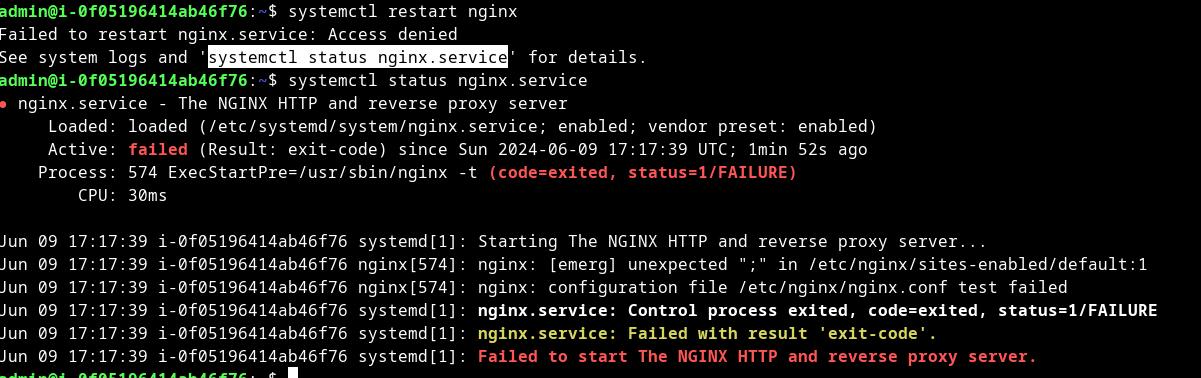

After logging into the server, I found that nginx was not working.

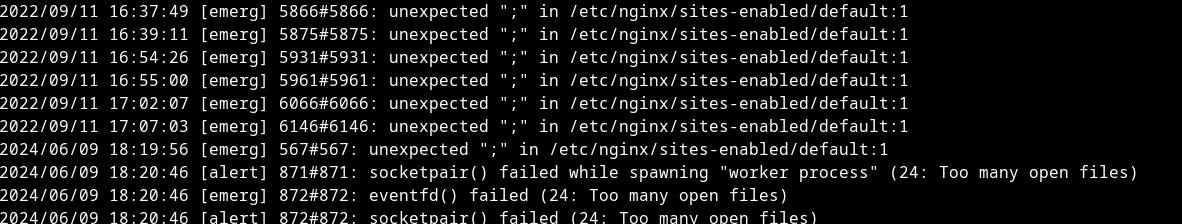

Examining the details of why nginx was not working pointed me to the first line of the nginx file, which contained ";". So I removed the ";" from the file, but nginx still did not work.

After examining the error log, I spotted a problem with file limits.

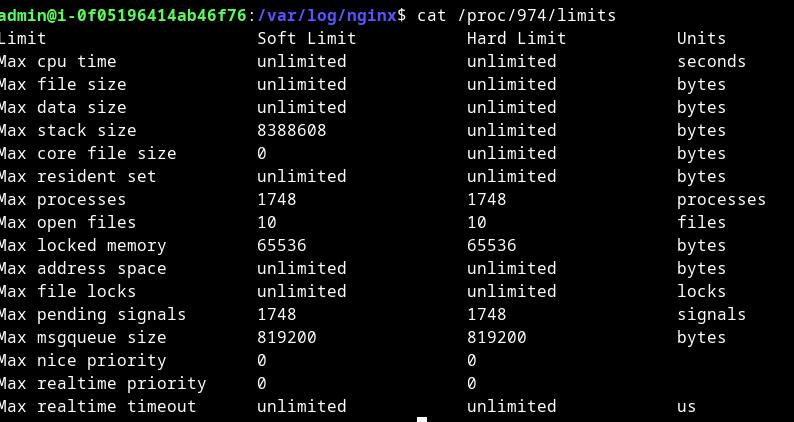

Viewing /proc/[pid]/limits showed this: Max open files 10.
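Reading that row can be sketched as a one-line helper. The function name `max_open_files` is hypothetical, and the example runs against the current shell rather than nginx, so the numbers it prints will differ from the ones on the server.

```shell
# Hypothetical helper: print the "Max open files" row for a PID.
# With no argument it inspects the current shell.
max_open_files() {
  grep '^Max open files' "/proc/${1:-$$}/limits"
}

max_open_files
# For nginx, pass its PID instead, for example:
#   max_open_files "$(pgrep -o nginx)"
```

The row lists the soft limit, the hard limit, and the unit, in that order.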

I checked the limits for the user www-data, as well as other settings (fs.file-max and others).
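These checks might look roughly like the following. The exact commands I ran are not listed here, so treat this as a sketch of the usual places to look.

```shell
# Soft and hard open-file limits for the current shell.
ulimit -Sn
ulimit -Hn

# System-wide ceiling on open file handles (the fs.file-max sysctl).
cat /proc/sys/fs/file-max

# To see the soft limit a www-data shell would get (needs sudo):
#   sudo -u www-data sh -c 'ulimit -n'
```

Keep in mind that these values describe shells and the kernel as a whole. A service started by systemd gets its limits from systemd's own configuration, so they can look fine while the service itself is still restricted.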

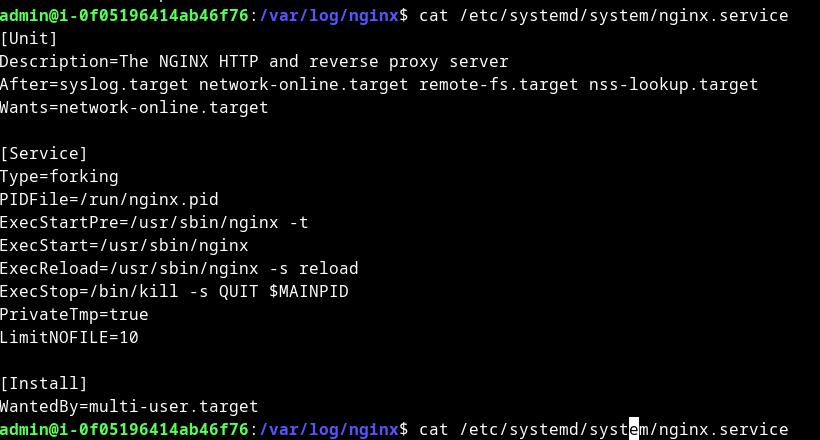

In the end, it occurred to me that systemd might impose its own per-process limits.

After reading the nginx .service file for systemd, I found the line that sets the limit. I added # at the start of that line, reloaded the systemd daemon, and restarted nginx, and it works!
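A sketch of that last step, assuming the line in question was a `LimitNOFILE=10` directive, which would match the "Max open files 10" seen in /proc/[pid]/limits. The unit file contents below are invented for illustration, and the snippet works on a temporary copy rather than the real file.

```shell
# Hypothetical stand-in for the nginx unit file (real path and contents may differ).
unit=$(mktemp)
cat > "$unit" <<'EOF'
[Service]
ExecStart=/usr/sbin/nginx -g 'daemon on; master_process on;'
LimitNOFILE=10
EOF

# Comment out the limit by prefixing the line with '#' (GNU sed syntax).
sed -i 's/^LimitNOFILE=/#&/' "$unit"
cat "$unit"

# On the real server, edit the actual unit file instead, then:
#   sudo systemctl daemon-reload
#   sudo systemctl restart nginx
```

Raising the value instead of commenting it out would also work; removing the line simply lets nginx fall back to the systemd default.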

This task took 30 minutes to solve.